The implications of AI in nuclear decision-making

The integration of AI into nuclear command and control systems is fraught with risk. Current AI systems display four systemic risks: they are often unreliable, opaque, susceptible to cyber threats and misaligned with human values. Therefore, it is imperative to better understand and categorize these risks and act with caution when integrating AI in nuclear decision-making processes.

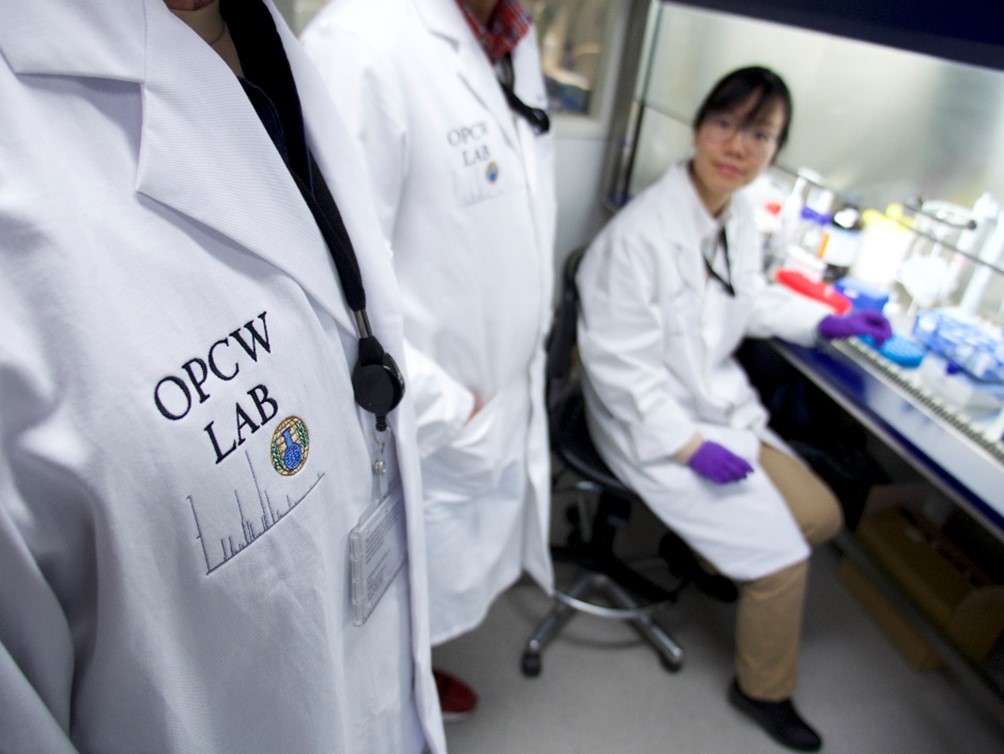

“Killer AI” and chemical weapons development: How real is the risk? How the OPCW could adapt

The emergence of AI will affect both the Chemical Weapons Convention and its implementing organization, the OPCW.

Molecular design with generative artificial intelligence

Generative AI is emerging as a cornerstone of medicinal drug discovery, offering a rich source of inspiration for pharmaceutical research. AI models can be calibrated to generate potential therapeutics but also potentially harmful molecules, which calls for responsible ethical oversight.

Artificial Intelligence and Biological Weapons

Generative artificial intelligence (AI) is transforming the life sciences. The intersection of AI and biology might facilitate the deliberate use of bacteria and viruses to inflict harm, but the extent to which the threat of biological weapons will change as a result of AI is still unclear.

The Manifold Implications of the AI-Nuclear Nexus

The integration of artificial intelligence into nuclear weapons systems will affect both military capabilities and human behaviour, especially with respect to nuclear decision-making. Whether nuclear command and control systems will be more or less safe, secure and reliable in the age of AI is still a matter of debate among experts.

The fast and the deadly: When Artificial Intelligence meets Weapons of Mass Destruction

Artificial intelligence will have a significant impact on the development, manufacturing and control of weapons of mass destruction. It is therefore prudent to slow down the integration of AI into the field of biological, chemical and nuclear weapons while the associated risks are not yet fully understood.